In the digital marketing industry, are there any two words scarier than “Technical SEO”? To most people, no.

When you’re trying to increase website traffic, technical SEO is an essential task. And you don’t need to hire an expensive web developer or SEO professional to accomplish it – you can actually do a lot of it yourself!

So, fire up your computer, stretch those fingers and get ready to assume the role of a master search engine optimizer. Here are four technical tips that almost anyone can apply to increase organic search visibility.

What is Technical SEO?

To successfully drive organic search traffic, your website must be accessible to both search engines and your visitors. Search engines send small applications, called crawlers or robots, to crawl your web pages. They navigate your site by following your internal linking structure.

Once search engine robots can access your website, your web pages can be indexed by the search engines. Being indexed allows your web pages to begin ranking in search engine result pages (SERPs) for the appropriate queries.

If you haven’t yet, set up Google Search Console to get started with tracking, analyzing and auditing your technical SEO strategy.

1) Robots.txt

Robots.txt is the standard name of a text file that is uploaded to a website’s root directory. Crawlers look for it at yoursite.com/robots.txt, so it does not need to be linked from the HTML code of the site. If there are files and directories that you do not want to be crawled by search engines, you can use a robots.txt file to define where the robots should not go.

Having a robots.txt, even one that doesn’t contain anything, shows that you allow search engines to crawl your site and that they may access it.

Your WordPress installation may require certain administrative areas to be blocked from search engine crawlers. If you don’t need to block bots from crawling anything, your robots.txt file should resemble the following syntax:

User-agent: *

Disallow:

Once you have your robots.txt file written (copy and paste the example above to get yourself started), save it and upload it to the root directory of your site. If your site is hosted with GoDaddy, you’ll need to go to cPanel and open File Manager, the first choice in the “Files” section. Once you’re in File Manager, locate the “public_html” folder. Press “upload” and upload your robots.txt file.

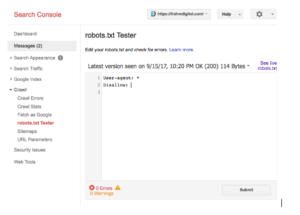

If you have Search Console set up, you can go to your account and, in the sidebar, click Crawl > robots.txt Tester. Here, you can submit and edit your file and confirm it is working.
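You can also sanity-check your rules on your own computer before uploading. The snippet below is a minimal sketch using Python’s built-in urllib.robotparser module. The helper name can_crawl, the yoursite.com URLs and the /wp-admin/ rule are illustrative assumptions, not requirements of your site.

```python
from urllib.robotparser import RobotFileParser


def can_crawl(robots_txt: str, url: str, user_agent: str = "*") -> bool:
    """Return True if the given robots.txt text lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# The "allow everything" file from the example above.
ALLOW_ALL = "User-agent: *\nDisallow:\n"

# Hypothetical variant that blocks a WordPress admin area.
BLOCK_ADMIN = "User-agent: *\nDisallow: /wp-admin/\n"

print(can_crawl(ALLOW_ALL, "https://yoursite.com/blog/"))                   # True
print(can_crawl(BLOCK_ADMIN, "https://yoursite.com/wp-admin/options.php"))  # False
print(can_crawl(BLOCK_ADMIN, "https://yoursite.com/blog/"))                 # True
```

This only checks how Python’s parser reads the file. Individual search engines may interpret edge cases differently, so it is still worth confirming the live file in Search Console.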

2) Submitting an XML Sitemap Index

A sitemap is an XML file that lists the URLs for a site along with additional metadata about each URL, such as when it was last updated, how often it changes and how important it is relative to other URLs on the site. With this, search engine crawlers can better understand the site. XML sitemaps are a simple way for webmasters to inform search engine robots about website pages that are available for crawling.

If you are worried about doing technical SEO yourself, reaching out to a professional may be a good choice. Learn more about choosing the right partner for your needs in our post, How to Choose an SEO Agency.

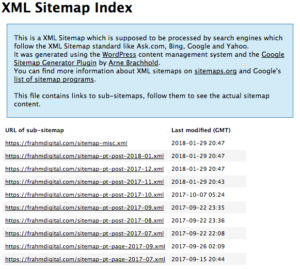

Implement a sitemap index at the URL: yoursite.com/sitemap.xml. Ensure that your XML sitemap auto-includes new pages created for your site. If this is not possible, then you’ll have to update the sitemap as new pages are created. To generate a sitemap, download a trusted WordPress plugin, such as Google XML Sitemaps, Yoast SEO or The SEO Framework. All three of these plugins will generate, submit and automatically update your sitemap.

XML sitemap example:
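If you are not using a plugin, a small sitemap can be generated by hand. The following is a minimal sketch using Python’s standard library. The page list, the dates and the function name build_sitemap are made-up placeholders, and the tags (loc, lastmod, changefreq, priority) follow the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build a sitemap XML string from a list of page dictionaries."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = page["loc"]
        ET.SubElement(url, "lastmod").text = page["lastmod"]
        ET.SubElement(url, "changefreq").text = page["changefreq"]
        ET.SubElement(url, "priority").text = page["priority"]
    ET.indent(urlset)  # pretty-print; requires Python 3.9 or newer
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Placeholder pages for illustration only.
example_pages = [
    {"loc": "https://yoursite.com/", "lastmod": "2024-01-15",
     "changefreq": "weekly", "priority": "1.0"},
    {"loc": "https://yoursite.com/blog/", "lastmod": "2024-01-10",
     "changefreq": "daily", "priority": "0.8"},
]

sitemap_xml = build_sitemap(example_pages)
print(sitemap_xml)
```

Saving the printed output as sitemap.xml in your site’s root directory would make it available at yoursite.com/sitemap.xml, though a plugin that updates the file automatically is usually less work.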

3) URL Structure Best Practices

URLs describe a site or page to visitors and search engines. Keeping them relevant, concise and keyword-oriented is crucial to ranking a website. When implementing your website’s URLs, follow the best practices below:

- Create URLs based on page content. For example, refer to the name of the product or service on specific pages or look to relevant/high search volume keywords for category pages.

- Place target keywords toward the beginning of the URL.

- Use dashes (-) to separate words in the URL. Do not include any other punctuation.

- URLs should be concise but must be unique – include distinguishing information (e.g. model, size, color) only when necessary, and place these terms toward the end of the URL.

- Once a page is in Enabled or Published status, any changes to the URL will require a 301 redirect from the old URL to the new URL. Work with the Development team to either implement redirects manually or ensure settings are in place to implement this automatically.
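The dash and punctuation rules above can be sketched as a small Python helper that turns a page title into a URL slug. The function name slugify and the sample title are hypothetical, and lowercasing the slug is an added assumption rather than one of the rules listed above.

```python
import re
import unicodedata


def slugify(title: str) -> str:
    """Convert a page title into a dash-separated URL slug."""
    # Drop accents and other non-ASCII characters.
    ascii_text = (
        unicodedata.normalize("NFKD", title)
        .encode("ascii", "ignore")
        .decode("ascii")
    )
    # Assumption: lowercase slugs, with apostrophes removed rather than dashed.
    lowered = ascii_text.lower().replace("'", "")
    # Replace every run of other punctuation or spaces with a single dash.
    return re.sub(r"[^a-z0-9]+", "-", lowered).strip("-")


print(slugify("Men's Running Shoes: Blue, Size 10"))
# mens-running-shoes-blue-size-10
```

Note that the distinguishing details (color, size) end up at the end of the slug because they come last in the title. The helper does not reorder words for you.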

4) Text-HTML Ratio

Your text to HTML ratio indicates the amount of actual text you have on your web page compared to the amount of code. Some SEO audit tools flag a warning when your text to HTML ratio is 10% or less. Search engines tend to favor pages that offer substantial content, so a higher text to HTML ratio may give your page a better chance of getting a good position in search results.

Less code can also improve your page’s load speed, which helps your rankings, and it lets search engine robots crawl your website faster.

To understand your text to HTML ratio, split your web pages’ text content and code into separate files and compare their size. If the size of the code file exceeds the size of the text file, review the page’s HTML code and consider optimizing its structure and removing embedded scripts and styles. You can also increase the on-page content with more body copy, headlines, image captions and other text-based items. While the jury is still out on how much this affects your online visibility, this technical SEO strategy stresses the importance of having a lot of relevant, useful content on your web pages that creates a better user experience. After all, content is what keeps users coming back for more!
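As a rough sketch of that comparison, the Python snippet below estimates the ratio without splitting anything into files. It assumes the ratio is defined as visible text size divided by total page size, and it skips the contents of script and style tags. The function name text_to_html_ratio and the sample page are illustrative, and audit tools may calculate the figure differently.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collect visible text, ignoring anything inside <script> or <style>."""

    SKIPPED_TAGS = {"script", "style"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED_TAGS and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)


def text_to_html_ratio(html: str) -> float:
    """Return visible-text bytes divided by total page bytes (0.0 to 1.0)."""
    extractor = TextExtractor()
    extractor.feed(html)
    extractor.close()
    # Collapse whitespace so indentation in the markup is not counted as text.
    text = " ".join(" ".join(extractor.chunks).split())
    total = len(html.encode("utf-8"))
    return len(text.encode("utf-8")) / total if total else 0.0


sample_page = (
    "<html><head><style>p{color:red}</style></head>"
    "<body><p>Hello world</p></body></html>"
)
print(f"{text_to_html_ratio(sample_page):.1%}")
```

To check a real page, save its source from your browser and pass the file’s contents to text_to_html_ratio.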

Best Of Luck With Your Technical SEO Implementations

If you’ve been putting off essential technical SEO updates because they seem complex, challenging – even a little frightening – make it easy on yourself by using these four strategies to improve your website’s performance today!